Building In Public 2: Startup = A Bunch of Simultaneous Experiments 👩‍🔬

Growing up, my favourite subject was science. I was a very curious kid (and still am). I tend to ask a lot of questions and love to understand why. I particularly liked the concept of the scientific method: hypothesis -> design -> test -> repeat. It is brilliant, a logical and methodical way to acquire and build new knowledge.

When I got into the startup world I (of course) read The Lean Startup by Eric Ries. It reintroduced the scientific method to me in the form of validated learning. I kept its lessons in the back of my mind as we hacked along in the early days of Steppen. Somewhere along the way, however, we forgot about them. We began spending a long time (a week, which is long at an early stage) implementing features we thought made sense, with little prior testing and even less follow-up after implementation.

We ended up implementing a bunch of features (namely contact access, location access, invites and tag searching…) because some social apps had them, so we assumed, ‘Hey, they have these features, so we should have them too.’ These are among Steppen’s theoretically best and most complete features, but they are also the least used. After taking some time to reflect on product progress, we realised that a lot of the major features we had worked hard to implement had been ‘failures’: no one was seeing them or wanted to use them. Personally, it was a humbling realisation. As the product person, I was the one who had been prioritising what to build, and I had been wrong. It sucked.

But after a goal-setting session with our mentor Simon, the team regained focus. We had forgotten a basic startup principle: everything is a test. Do the hackiest thing you can to get the information you need, then decide whether it is worth investing more time. It was time to run a bunch of product experiments!

I’ll walk you through how we now approach the product (and the business as a whole) using recommended workouts, now our most successful feature implementation, as the example.

Approach to Recommended Workouts

- Hypothesis: If people want to see workouts suited to their goals, then recommending workouts and changing them frequently will increase users returning to the app

- Why? To easily connect users to content they want to see (this relates directly to the problem we are solving at Steppen; check out more on that problem here)

- Who is this for? Browsers (users who like scanning the app for new fitness content)

- Expected behaviour? Users select a workout recommended for them

- Assumptions being made: People are primarily using Steppen to find workouts. The initial information we collect will be enough to provide a decent workout suggestion.

- Key metric: Number of clicks on suggested workouts and % of active users clicking on recommended workouts

- Success means: 30–40% of users clicking on suggested workouts to check them out

- Step 1 (single-day implementation): Ask users what their goal is and, based on that goal, suggest 3 workouts (keep a list of workouts we recommend and rotate through it)

- Future Steps: Collect more information to curate workouts better, further automate the recommendation process, update workouts daily, get feedback from users on the quality of our recommendations, and let users edit their preferences
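The key metric and the success band above are simple enough to check mechanically. Below is a minimal Python sketch, assuming that for a given day you can pull the set of active user IDs and the set of user IDs who clicked a recommended workout. `DailyStats`, `pct_active_clicking` and `verdict` are hypothetical names for illustration, not Steppen’s actual analytics code.

```python
from dataclasses import dataclass

# Success band from the "Success means" line: 30–40% of users clicking.
SUCCESS_LOW = 0.30
SUCCESS_HIGH = 0.40


@dataclass
class DailyStats:
    active_users: set[str]            # IDs of users active that day (assumed available)
    clicked_recommendation: set[str]  # IDs of users who clicked a recommended workout


def pct_active_clicking(stats: DailyStats) -> float:
    """Share of the day's active users who clicked at least one recommended workout."""
    if not stats.active_users:
        return 0.0
    # Only count clickers who were also active that day.
    clickers = stats.clicked_recommendation & stats.active_users
    return len(clickers) / len(stats.active_users)


def verdict(share: float) -> str:
    """Compare the key metric against the success band agreed before launch."""
    if share < SUCCESS_LOW:
        return "below target"
    if share > SUCCESS_HIGH:
        return "above target"
    return "within target"


# Toy example with made-up users: 2 of 5 active users clicked.
day = DailyStats(active_users={"a", "b", "c", "d", "e"}, clicked_recommendation={"a", "b"})
share = pct_active_clicking(day)
print(f"{share:.0%} -> {verdict(share)}")  # prints "40% -> within target"
```

Writing the band down as constants before launch keeps the comparison honest: the verdict comes from the threshold set in advance, not from how the numbers look afterwards.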

More Generally: The Product Framework

While I used recommended workouts to illustrate the framework, it is important to realise that we apply it to all product experimentation. Below I explain why each dot point matters and what it leads us to consider.

- 👩‍🔬 Hypothesis: grounds the feature in a use case (if) and an action (then)

- 🤷‍♀️ Why? What user problem are we trying to solve? This gets us thinking in problems and solutions rather than features.

- 💁🏽‍♀️ Who is this for? Leads us to think about actual users and the value they will derive (we always want to be user focused!)

- 👀 Expected behaviour? Thinking about how those users will interact with this feature

- 🧐 Assumptions being made: recognises that there are things we assume. Highlighting them leads us to question whether they are fair and to consider the possible impact if they turn out to be wrong.

- 📈 Key metrics: identifies what we will track to determine whether the results were as expected. For us this part was particularly important, as it creates accountability and prompts reflection.

- 🚀 Success means: how we know whether this feature performed well. This is important so that we can learn from it.

- 1️⃣ Step 1: What is the fastest and most effective way we can test our hypothesis?

- 🔮 Future Steps: To limit scope creep, we clearly separate these from Step 1.

Implementation of Recommended Workouts

Below is the design for recommended workouts. After setting up their account, the user selects a goal, and based on that goal we recommend workouts to them. We add 3 new workout suggestions every 2 days.
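One way to read Step 1 (“have a list of workouts we recommend and rotate through this list”) together with the 3-every-2-days cadence is a fixed rotation over a curated list per goal. The Python sketch below assumes each new batch replaces the previous one and wraps back to the start when the list runs out. The goals, workout names and the `recommendations` function are hypothetical placeholders, not Steppen’s catalogue or code.

```python
# Hypothetical curated lists per goal; placeholders, not a real catalogue.
RECOMMENDED = {
    "build_strength": ["Push-up ladder", "Goblet squats", "Deadlift basics",
                       "Core circuit", "Pull-up progressions", "Kettlebell swings"],
    "improve_cardio": ["5k intervals", "Jump rope HIIT", "Stair sprints",
                       "Cycling tempo ride", "Rowing pyramid", "Hill repeats"],
}

BATCH_SIZE = 3         # workouts shown at a time
ROTATE_EVERY_DAYS = 2  # assumption: a fresh batch replaces the old one every 2 days


def recommendations(goal: str, days_since_signup: int) -> list[str]:
    """Return the batch of workouts to show for `goal` on a given day.

    Walks through the curated list in fixed-size batches and wraps
    around to the start once the list is exhausted.
    """
    workouts = RECOMMENDED[goal]
    batch_index = days_since_signup // ROTATE_EVERY_DAYS
    start = (batch_index * BATCH_SIZE) % len(workouts)
    # Modulo indexing lets a batch wrap past the end of the list.
    return [workouts[(start + i) % len(workouts)] for i in range(BATCH_SIZE)]
```

With these lists, `recommendations("build_strength", 2)` returns the second batch, and day 4 wraps back to the first. Keeping the logic this simple is what makes a single-day implementation possible; smarter curation belongs in the Future Steps.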

Results of Experiment: Recommended Workouts

Implementing this feature took a single day, which meant we could start getting data on this experiment almost instantly. So what were the results? Below I have included two graphs.

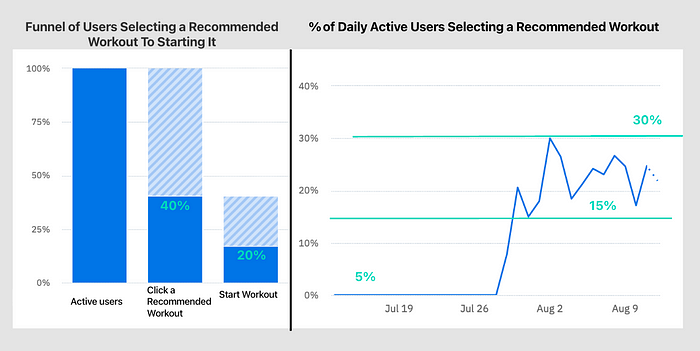

- Funnel of (total) users moving from selecting a recommended workout (40%) to starting that workout (20%)

- % of daily active users who select a recommended workout; this number fluctuates between 15% and 30%.

Comparing these results to what we had defined as success (“30–40% of users clicking on suggested workouts to check them out”), we were spot on! Future aspects to test, uncover and optimise for:

- Increase conversion from clicking a recommended workout to completing it

- Understand the types of users who select recommended workouts vs. those who don’t

- Understand fluctuations in clicks on recommendations
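The funnel figures also give a baseline for the first item above. Because both stages are reported as shares of total users, the step-to-step conversion is simply their ratio: 20% / 40% = 50%, so about half of the users who selected a recommended workout went on to start it. Here is a small Python sketch of that calculation. The `step_conversions` helper is hypothetical, and note that the funnel measures starting a workout, not completing it.

```python
def step_conversions(funnel: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Turn a funnel given as shares of *total* users into step-to-step
    conversion rates (each stage relative to the stage before it)."""
    rates = []
    for (prev_name, prev_share), (name, share) in zip(funnel, funnel[1:]):
        # Guard against an empty previous stage to avoid dividing by zero.
        rate = share / prev_share if prev_share else 0.0
        rates.append((f"{prev_name} -> {name}", rate))
    return rates


# Shares of total users, as reported in the funnel graph above.
funnel = [("selected recommended workout", 0.40), ("started that workout", 0.20)]
for step, rate in step_conversions(funnel):
    print(f"{step}: {rate:.0%}")  # prints "selected recommended workout -> started that workout: 50%"
```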

Experimenting With the Entire Business

While the example I have detailed applies an experimental framework within the product, we now apply this mindset to all aspects of our business: marketing, retention and content acquisition. It is a discipline we are still learning and getting into. There are certainly still times we steam ahead without first thinking why, but as a team we do a good job of holding one another accountable to this experiment-based approach.

Already I have found this framework much better at grounding us in getting things out fast and iterating towards perfection. It has made the entire team hungry for information and shifted the emphasis to learning rather than tasks (or features). Let me know your thoughts; I’m happy to discuss further!

Until next month, friends (and in the meantime there will be many more tests…),

— Cara 🚀